For the Drupal community, January is the month when the candles are blown out: today, January 15, 2026, we celebrate 25 years of a technology that has shaped the web. 🎂

But in Open Source, the best way to honor a project is to build its future and tell its story. What better time, then, to stop, tidy up, and share our contributions to the ecosystem?

2025 was the year when artificial intelligence went from "interesting feature" to infrastructure. A bit like the cloud a decade ago: first curiosity, then experimentation, finally inevitability. In between, a question that those working on digital platforms can no longer postpone: "How do we bring AI into production… without losing control, security, and quality?"

This is exactly where the Drupal AI Initiative comes in: a project born to transform the (very real) energy of the community into a coordinated vision, with the goal of making Drupal not just "AI-compatible," but an enterprise-grade CMS even when AI enters the heart of editorial and business workflows.

At SparkFabrik, we didn't just stand by and watch. We understood that we didn't want to be mere users of this new technology, but "makers." After all, Open Source isn't a "marketing strategy" for us—it's part of our history and our DNA.

We chose to be there, with real contributions, in areas we identified as decisive for real adoption: developer experience, governance & security (guardrails), RAG & search, agentic workflows (MCP and toolchain), enterprise integrations, and, something often underestimated, communication and community building.

Here's how we contributed to building the future of Drupal in 2025, explained from a technical angle but written for decision-makers too.

And speaking of contribution: what better occasion to announce that on Saturday, January 31, we will host the Drupal Contribution Day in Milan? It's now a recurring and unmissable event in our offices, a time to meet, write code together (but not only!) and "give back" to the community. Register here to join us!

What is the Drupal AI Initiative

The Drupal AI Initiative is a strategic project aimed at integrating AI into Drupal effectively, evolving the system to position it as the best open-source "agentic CMS."

The Drupal ecosystem had been talking about AI for some time, with a growing number of features, integrations, and modules that gave a taste of what was possible. However, it became clear that real strategic impact required going beyond fragmented contributions and channeling efforts in a shared direction.

With this awareness, the Drupal AI Initiative was launched on June 9, 2025, with the goal of bringing structure, strategy, and shared direction to innovation (and a common vision of AI that supports people, is secure, and fully governable even in enterprise environments). In other words: not just "AI modules," but a common direction, a framework, and an ecosystem capable of growing without losing governability.

For decision-makers (CTOs, CEOs, digital managers, marketing leads), this point is huge: it means being able to bring AI into digital experiences without vendor lock-in, without having to rebuild everything from scratch, and with the governance backbone typical of Drupal.

First of all: who contributed (people, not just code)

Innovation is driven by people. Our contribution wouldn't have been possible without a significant structural investment: since June 2025, we've dedicated 50% of the working time of two of our best technical talents exclusively to the Drupal AI Initiative.

We're not talking about spare time, but constant, daily commitment. An amount of resources justified by our internal strategic vision, and often further fueled by personal passion that goes well beyond office hours.

| Contributor | Role & Focus |
|---|---|
| Luca Lusso | Lead Developer & Architect. Drupal contributor and speaker, with experience in modules and advanced integrations (including WebProfiler, Monolog, Symfony Messenger, Search API Typesense). He worked on infrastructural foundations (the DDEV development environment, the Agent Runner, Guardrails). |
| Roberto Peruzzo | Senior Developer & Maintainer. Contributor to various modules and integrations (Iubenda, Search API Typesense, Panther, TMGMT Lara Translate, MCP Client). He focused on interoperability (MCP), RAG, and vertical integrations. |

The team's prior experience was fundamental, because many of our 2025 contributions weren't theoretical exercises, but technical choices made by those who have already seen what works (and what breaks) when a project has to live in the real world.

How we worked: governance, consistency and alignment

Guiding the strategic vision was our CTO, Paolo Mainardi, who coordinated activities with a precise methodology to ensure we delivered value continuously (not only for the ecosystem and community, but also for the real enterprise challenges of our clients).

In 2025, we worked in sprints, with dedicated issues and milestones, and stayed aligned through internal weekly coordination calls. We also took part in the community's weekly asynchronous updates to bring our perspective. This model allowed us to:

- choose priorities and "cut" what didn't bring value

- explore alternative solutions in depth, beyond the contributions themselves

- define and evolve PoCs into reusable solutions

- maintain a constant flow of contributions, despite the pressure of commercial projects

- create a stable bridge between technical work and communication

Regarding the last point, an important aspect was working together with the marketing team: Stefano Mainardi (CEO) and Alessandro De Vecchi worked constantly to tell the story of Drupal, the AI Initiative, and what we were building.

Because open source really works when you build and share.

AI Development Environment in Drupal: our DDEV add-on to lower the barrier to entry

The first major challenge we faced was infrastructural. In June, at the start of the Drupal AI Initiative, contributing was complex and slow because of a fundamental Developer Experience (DevEx) bottleneck.

Our first major contribution was therefore a DDEV-based development environment, designed to make local setup replicable and fast, and to allow working on multiple modules simultaneously without going crazy.

More specifically, the main problem was how hard it was to configure a local environment for a context like Drupal AI, where you need to develop and test multiple modules at the same time. Dependency management was rigid (dependencies risked being reinstalled every time), only one project could be cloned at a time (so working on several modules meant many manual git clones), and the DDEV-specific configuration files that had to be added and maintained made everything tedious and hard to scale.

Our effort was therefore aimed at lowering these barriers to entry and accelerating the innovation cycle for the entire community, giving developers a pre-configured local AI development environment that is up and running in a very short time.

Our strategy evolved in two phases, from a general solution to a specialized one. The first step was proposing a new DDEV add-on (DDEV Drupal Suite add-on): a generic tool that any contrib module can use, which drastically simplifies setup and streamlines contributing to multiple modules simultaneously.

Building on this, we first developed a "Drupal recipe" (AI Dev Recipe) that installs and configures a minimal set of AI modules, and then an interactive CLI wizard (DDEV Drupal AI Add-on) that orchestrates the entire configuration of AI functionality, automatically handles dependencies, configuration, and installation, and can be easily extended with simple YAML instructions.

Development then continued to make the solution even more flexible, for example by adding ready-to-use vector database support (PostgreSQL with pgvector), optional support for high-performance scenarios (Milvus), and quality assurance tools (GrumPHP).

Today, this solution is used by the global community and is available as an add-on. In parallel, to further ease adoption, we proposed transferring it to the official DDEV namespace, so that it becomes the standard for AI development in Drupal. It was one of our first contributions, but it laid the foundation for everything else.

Why does this contribution matter for business too? Because when AI enters an enterprise CMS, the impact is as much organizational as functional. The speed at which a team can run tests, ship fixes, iterate, and release determines the real time-to-market, and a standardized development environment is often the first, powerful productivity multiplier.

Guardrails: bringing security, governance, and control to Drupal AI

Every time we talk about AI in production, sooner or later we get here: trust.

AI can create content, summarize, classify, orchestrate actions, call external tools. But it can also "hallucinate" (with great confidence), deviate from company policies, expose sensitive data, generate unsafe or inappropriate output.

Consider, for example: how can we ensure that a chatbot doesn't give inappropriate responses, such as suggesting a competitor's product, offending the user, or inventing false information? And how can we prevent sensitive data (PII) from being sent to third-party providers, whether through our prompts or through information collected by autonomous agents?

The risks are real and, in the enterprise world, an AI that "hallucinates" or responds inappropriately isn't just a bug, it's an unacceptable reputational risk. And if it shares sensitive data, it's an even more serious violation. While many focused on text generation, we focused on control.

For this reason, we worked on defining so-called "Guardrails", intelligent rules that control and guide AI behavior, effectively limiting it to ensure it respects the guidelines, values, and objectives of each organization.

In our vision, guardrails are not optional: they are a strategic requirement to make AI deployable in real contexts.

Definition of guardrails in Drupal AI

Luca's contribution on this front was substantial, starting with defining the very concept of Guardrails in Drupal, which turned out to be much broader than initially assumed.

- Guardrails are in fact necessary as a cross-cutting layer for all interactions with LLMs: modules, agents, chatbots, content generators, etc. Importantly, guardrails are also essential for securing communications with other external systems, protecting the exchange of parameters and sensitive data, such as those managed through MCP.

- They must analyze and sanitize user input before it reaches the LLM (e.g., removing personal data or blocking forbidden topics), and analyze the AI output before the response is shown to the user.

- In case of a failed check, a guardrail can completely block the execution of a request ("Sorry, I can't respond due to policy violation") or rewrite input/output by removing problematic data ("Here's the response omitting personal information").

- A single filter is typically not sufficient. Rather, a "Set of Guardrails" is needed, with several guardrails operating simultaneously, each specialized in a different type of control (e.g., one for personal information, one for forbidden content, one for user permissions).

Our contribution culminated in the creation of a new plugin architecture to manage guardrails. The solution is highly customizable: it not only supports the configuration of individual guardrails, but also the ability to combine multiple controls in different sets.

Both controls on user inputs (pre-LLM controls) and on AI output (post-LLM controls) are supported, and different checks can be defined for the two phases to meet different needs. Last but not least, two distinct types of guardrails are implemented: deterministic (regex-based) and non-deterministic (LLM-based topic detection).
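To make the pre-LLM/post-LLM flow more concrete, here is a minimal Python sketch of guardrail sets. It is not the Drupal AI plugin API (which is PHP), and every class and function name below is hypothetical. A regex guardrail masks e-mail addresses (deterministic, rewrites instead of blocking), and a topic guardrail blocks the request. In the real architecture topic detection is LLM-based; here a keyword lookup for an invented competitor, "ACME", stands in for the model. In this sketch a set applies its guardrails in sequence, so a rewrite made by one guardrail is what the next one sees.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, Optional

REFUSAL = "Sorry, I can't respond due to policy violation."


@dataclass
class GuardrailResult:
    allowed: bool                 # False means "block the whole request"
    text: str                     # possibly rewritten text
    reason: Optional[str] = None


class RegexMaskGuardrail:
    """Deterministic guardrail: rewrites every regex match instead of blocking."""

    def __init__(self, pattern: str, replacement: str) -> None:
        self.pattern = re.compile(pattern)
        self.replacement = replacement

    def check(self, text: str) -> GuardrailResult:
        return GuardrailResult(True, self.pattern.sub(self.replacement, text))


class TopicBlockGuardrail:
    """Blocks forbidden topics; `classify` stands in for an LLM topic detector."""

    def __init__(self, forbidden: set, classify: Callable[[str], str]) -> None:
        self.forbidden = forbidden
        self.classify = classify

    def check(self, text: str) -> GuardrailResult:
        topic = self.classify(text)
        if topic in self.forbidden:
            return GuardrailResult(False, text, f"forbidden topic: {topic}")
        return GuardrailResult(True, text)


class GuardrailSet:
    """Runs guardrails in order: the first block wins, rewrites accumulate."""

    def __init__(self, guardrails: Iterable) -> None:
        self.guardrails = list(guardrails)

    def apply(self, text: str) -> GuardrailResult:
        for guardrail in self.guardrails:
            result = guardrail.check(text)
            if not result.allowed:
                return result
            text = result.text
        return GuardrailResult(True, text)


def guarded_call(prompt: str, llm: Callable[[str], str],
                 pre: GuardrailSet, post: GuardrailSet) -> str:
    checked_input = pre.apply(prompt)                      # pre-LLM checks
    if not checked_input.allowed:
        return REFUSAL
    checked_output = post.apply(llm(checked_input.text))   # post-LLM checks
    return checked_output.text if checked_output.allowed else REFUSAL


def keyword_topic(text: str) -> str:
    # Hypothetical stand-in for an LLM classifier.
    return "competitors" if "acme" in text.lower() else "general"


def echo_llm(prompt: str) -> str:
    # Fake LLM that just echoes the (already sanitized) prompt.
    return f"You wrote: {prompt}"


EMAIL = r"[\w.+-]+@[\w-]+\.[\w.-]+"
mask_email = RegexMaskGuardrail(EMAIL, "[email removed]")
pre_set = GuardrailSet([mask_email, TopicBlockGuardrail({"competitors"}, keyword_topic)])
post_set = GuardrailSet([mask_email])

print(guarded_call("Contact me at jane@example.com", echo_llm, pre_set, post_set))
# → You wrote: Contact me at [email removed]
print(guarded_call("Is ACME cheaper?", echo_llm, pre_set, post_set))
# → Sorry, I can't respond due to policy violation.
```

The two outcomes mirror the two behaviors described above: the first request is rewritten and allowed through, the second is blocked outright.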

For decision-makers, the value of this contribution is immense: guardrails transform Drupal AI from a promising technological experiment to a reliable, secure, and enterprise-ready platform, where AI always works for the business, and never against it.

Support for Bedrock and data sovereignty

During experiments, we explored the main Guardrails solutions already available, with a particular focus on AWS Bedrock, one of the most powerful solutions on the market.

Our tests confirmed excellent support for many languages (including Italian), particularly for the PII masking functions: specific guardrails that remove personal information without blocking execution.

But it's important to tell the reality honestly: an external (extra-European) service introduces privacy implications, compliance questions, and technological dependence (vendor lock-in).

These concerns are particularly relevant in regulated contexts (or in public administration), where it must be carefully evaluated which data is transmitted externally, how it's processed, and what residual risk remains.

For this reason, while it supports Bedrock, our architecture is designed to be provider-agnostic. It allows integrating other solutions in the future (such as Azure, Google Cloud, Guardrails.ai) and, a very interesting alternative, local models that ensure data sovereignty.

Streaming, guardrails, evolutions

Another technical point we addressed is the complexity of guardrails in the presence of streaming responses, i.e., when the AI sends each piece of the response as it generates it, and not just the complete output at the end of generation.

For user experience, streaming is a highly appreciated feature. However, in the presence of streaming, it's not enough to perform a final output check, because the user might already have been exposed to inappropriate information. Rather, guardrail control must be performed at each update of the response from the LLM (at each new output token).

Validating output token-by-token, managing tool calls in the flow, and simultaneously activating guardrail sets at each update is a non-trivial issue.

The temporary solution was to disable streaming when guardrails are active, pending a more robust approach. This detail matters for usability: solving it is what takes the feature from "safe" to "safe and pleasant to use."
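Since the adopted solution disables streaming, what follows is only one possible direction, not the Drupal AI implementation: a Python sketch (all names hypothetical) that re-checks the accumulated output after every chunk and holds back the last few characters, so a forbidden term split across two chunks is caught before any part of it reaches the user. The holdback must be at least the longest possible match minus one; for a plain word pattern, that is the word's length minus one.

```python
import re
from typing import Iterable, Iterator

STOP_MESSAGE = "[response stopped by guardrail]"


def guarded_stream(chunks: Iterable[str], forbidden: re.Pattern,
                   holdback: int) -> Iterator[str]:
    """Yield LLM output chunk by chunk, re-checking the whole buffer each time.

    Only text older than `holdback` characters is released, so a forbidden
    match that straddles two chunks is detected before any of it is shown.
    """
    buffer = ""
    emitted = 0  # how many characters of `buffer` the user has already seen
    for chunk in chunks:
        buffer += chunk
        if forbidden.search(buffer):
            yield STOP_MESSAGE
            return
        safe_end = max(emitted, len(buffer) - holdback)
        if safe_end > emitted:
            yield buffer[emitted:safe_end]
            emitted = safe_end
    if emitted < len(buffer):
        yield buffer[emitted:]  # stream finished cleanly: flush the held-back tail


pattern = re.compile(r"password", re.IGNORECASE)
hold = len("password") - 1
print(list(guarded_stream(["Your ", "pass", "word is"], pattern, hold)))
# → ['Yo', '[response stopped by guardrail]']
print(list(guarded_stream(["Hello ", "world"], pattern, hold)))
# → ['Hell', 'o world']
```

Note that "pass" is never shown in the first example. Re-scanning the whole buffer at every chunk is quadratic in the response length, and tool calls inside the stream are not handled; both are part of what makes the real problem non-trivial.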

And there's another front that will need to be addressed in 2026: guardrails for multimedia content (images, videos). It's a topic still to be explored in depth, but we already know it can't be left to chance.

AI Agents in production: asynchronous tasks and streaming with Symfony Messenger (to overcome PHP limitations)

AI Agents are a completely different entity from simple chatbots of the past. A complex agent plans, executes multiple steps, calls tools, handles errors, queries databases, processes data. In short, Agents require time to "reason," with processes that can last even several minutes.

And this is where the classic synchronous model of a PHP web request shows its limits: timeouts, sessions that close, UI that interrupts execution, difficulty with retry/monitoring.

The problem is therefore: how do we run complex agents on a Drupal site, without the page timing out? A real problem, which also emerged on a project for one of our clients.

To address this limitation, we designed a solution and built a PoC of an "AI Agent Runner" based on Symfony Messenger, with two clear objectives:

- asynchronous execution of agentic tasks (robust, retryable, monitorable), decoupling agent execution from the user's web request.

- streaming of responses to the frontend when a fluid conversational experience is needed, while continuing to support synchronous execution with (almost) the same code.
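The following Python sketch illustrates only the dispatch/worker split that Symfony Messenger provides in PHP; it is not the Runner's code. A `queue.Queue` stands in for the message transport, a background thread for the worker process that consumes messages (`messenger:consume` in a Symfony application), and all names (`AgentRunner`, `dispatch`, `max_attempts`) are hypothetical. Streaming is left out.

```python
import queue
import threading
import uuid
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class AgentTask:
    task_id: str
    prompt: str
    attempts: int = 0


class AgentRunner:
    """The web request only dispatches a message; a background worker runs the agent."""

    def __init__(self, agent: Callable[[str], str], max_attempts: int = 3) -> None:
        self.agent = agent
        self.max_attempts = max_attempts
        self.bus: "queue.Queue[AgentTask]" = queue.Queue()  # stand-in for a transport
        self.status: Dict[str, str] = {}
        self.results: Dict[str, str] = {}

    def dispatch(self, prompt: str) -> str:
        """Called during the web request: enqueue and return at once, no timeout risk."""
        task = AgentTask(str(uuid.uuid4()), prompt)
        self.status[task.task_id] = "queued"
        self.bus.put(task)
        return task.task_id  # the UI can poll (or subscribe to) this id

    def work(self) -> None:
        """Worker loop: long-running agent calls happen here, outside the request."""
        while True:
            task = self.bus.get()
            try:
                self.results[task.task_id] = self.agent(task.prompt)
                self.status[task.task_id] = "done"
            except Exception:
                task.attempts += 1
                if task.attempts < self.max_attempts:
                    self.status[task.task_id] = "retrying"
                    self.bus.put(task)  # re-queue before task_done() so join() keeps waiting
                else:
                    self.status[task.task_id] = "failed"
            finally:
                self.bus.task_done()


# Usage: an agent whose first tool call fails, then succeeds on retry.
calls = {"n": 0}


def flaky_agent(prompt: str) -> str:
    calls["n"] += 1
    if calls["n"] == 1:
        raise RuntimeError("tool call timed out")
    return prompt.upper()


runner = AgentRunner(flaky_agent)
threading.Thread(target=runner.work, daemon=True).start()
task_id = runner.dispatch("plan a trip")
runner.bus.join()  # only for the demo; a real UI would poll the status instead
print(runner.status[task_id], runner.results[task_id])  # → done PLAN A TRIP
```

Because `dispatch` returns immediately, the duration of the agent run no longer depends on the lifetime of the web request, and retries and status tracking come from the queueing layer rather than from the page.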

This contribution was also brought to a public context: a technical webinar organized together with the team coordinating the Drupal AI Initiative, in which Luca showed how Symfony Messenger can become a key piece to overcome the architectural limitations of Drupal and PHP when talking about agents.

For a decision maker, the point is very simple: with synchronous execution alone, agents remain beautiful but limited and fragile prototypes. With an asynchronous architecture, automation can become complex and reliable.

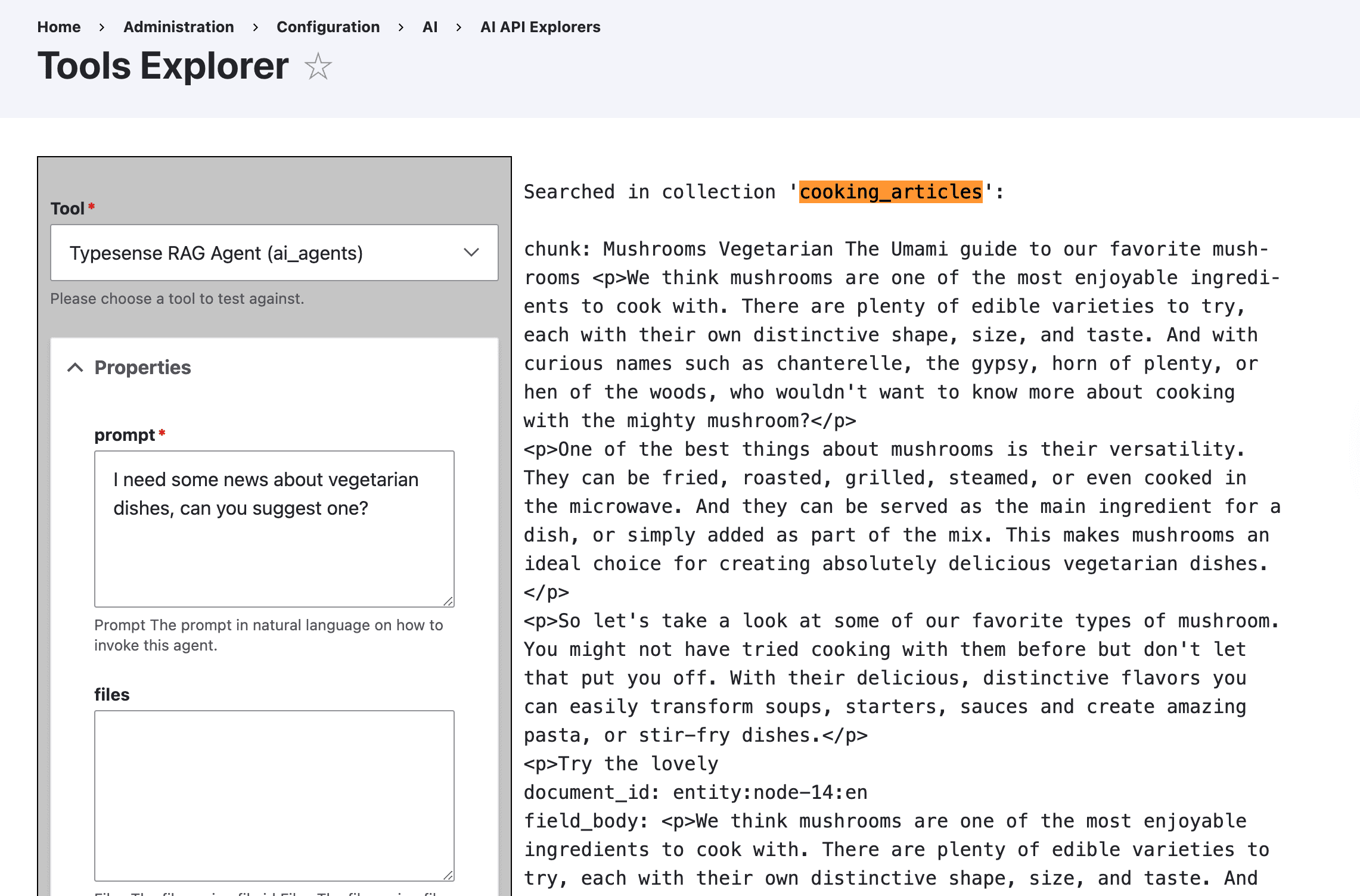

RAG and Typesense

One of the quickest ways to lose trust in AI is to ask it something it should know… and see a plausible but wrong answer. RAG (Retrieval-Augmented Generation) was born for this: to anchor responses to a real and controllable knowledge base.

SparkFabrik has historically been the maintainer of the Search API Typesense module, which has given us deep experience both in "classic" search and in the evolution towards semantic search and vector databases. With the advent of AI, it was natural for us to evolve this tool.

Roberto Peruzzo worked on implementing Typesense support for RAG in the AI module, with an approach aimed at improving relevance and efficiency.

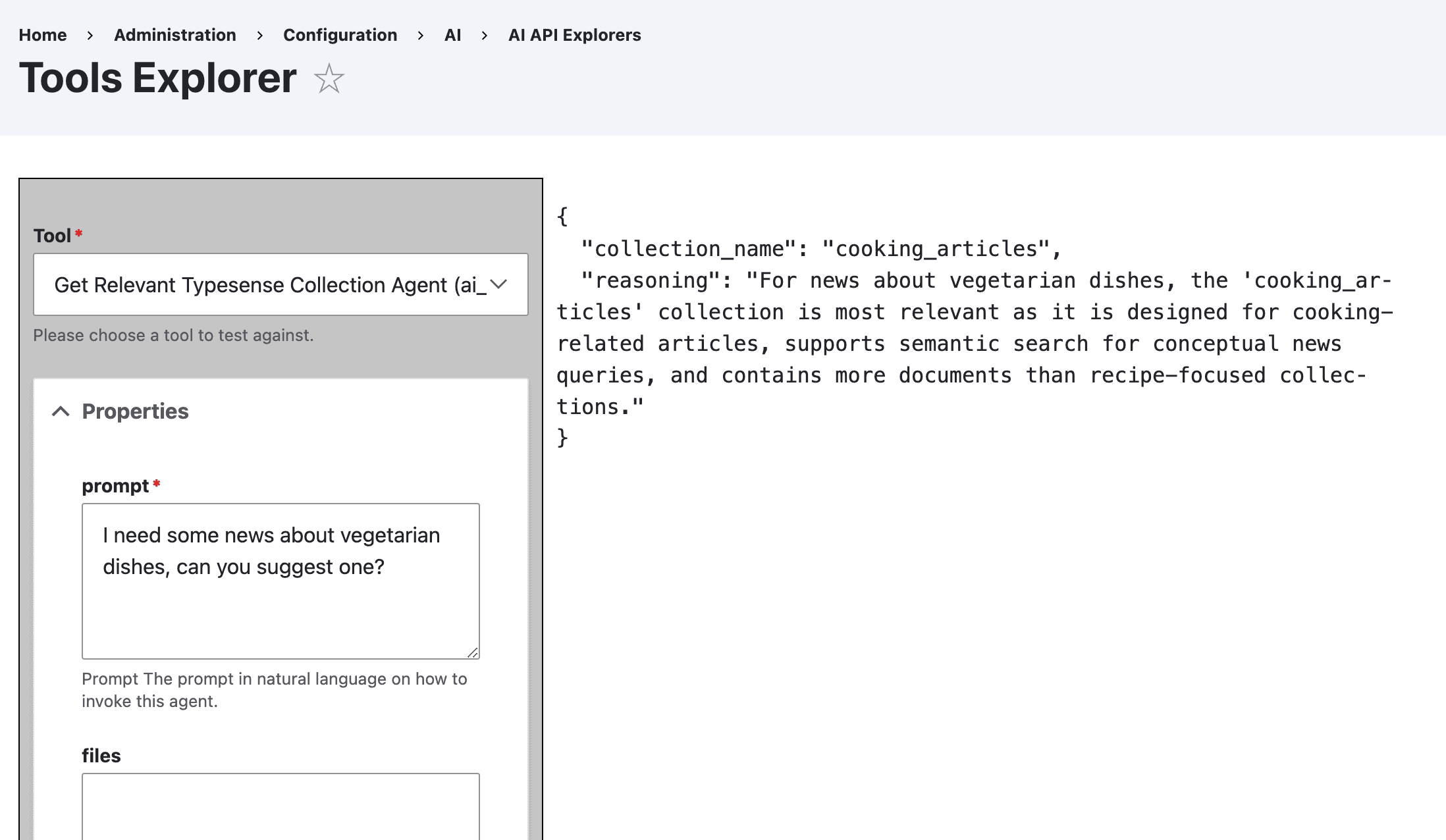

One of the most interesting elements we introduced is the "Router Agent," a sub-agent that decides "where to search." In a complex system with many indexes (e.g., Technical Manuals, Blog, Products), querying everything is inefficient. Given a user input, the main agent activates the sub-agent, which selects the most relevant Typesense collection to query (based on intent) before the final response is formulated.

This reduces "noise" (irrelevant data sent to the AI) and hallucinations, lowers token costs, and drastically increases response accuracy. Furthermore, dynamic routing avoids hardcoding the mapping of collections, so adding a new collection does not require new code.

In short, you get a better user experience, scalable code and cost savings.
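A minimal Python sketch of the routing idea, under explicit assumptions: in the real Router Agent an LLM sub-agent infers the intent, while here a simple keyword-overlap score stands in for it, and the collection names and descriptions are invented. What the sketch does show is the design choice described above: collections are data (a name plus a description), so registering a new one requires no new routing code.

```python
import re
from typing import Dict, Set


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def route(query: str, collections: Dict[str, str]) -> str:
    """Return the collection whose description shares the most words with the query.

    Keyword overlap is a stand-in for the LLM sub-agent's intent detection.
    """
    query_words = tokenize(query)
    return max(collections, key=lambda name: len(query_words & tokenize(collections[name])))


# Hypothetical collections: name -> short description of what the index holds.
COLLECTIONS = {
    "technical_manuals": "installation setup error troubleshooting manual configuration",
    "blog": "news article opinion event announcement",
    "products": "price buy product catalog model availability",
}

print(route("How do I fix an installation error?", COLLECTIONS))  # → technical_manuals
print(route("What is the price of this product?", COLLECTIONS))    # → products

# Adding a collection is a data change, not a code change.
COLLECTIONS["faq"] = "frequently asked questions shipping returns refund"
print(route("How do returns work?", COLLECTIONS))  # → faq
```

In a real pipeline the selected name would then be used as the target collection of the Typesense search, and only those results would be passed to the LLM.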

MCP: Drupal becoming context and toolchain for agents

MCP (Model Context Protocol) is one of the "pieces" that let Drupal communicate with external systems, tools, and agents in a standardized way. It's one of the next big frontiers, and in 2025 we worked and contributed on MCP in several phases.

Exploration and direction

We started with an exploration of the state of the Drupal MCP ecosystem: understanding what was already possible, what was missing, and where it made sense to invest to also generate value for our real projects. In this phase, we also considered using JSON:API via MCP to build decoupled frontends.

PoC: generating a React frontend via JSON:API + Tools

After exploration, we carried out a more concrete experiment with the goal of testing the effectiveness of the approach and the potential of the protocol: creating a complete React app through an AI connected to the Drupal MCP server, capable of reading its structure and content (content types, fields…).

Built with a very detailed prompt split into several phases ("spec-driven development"), the experiment was a success: it demonstrated that Drupal can expose its structure ("intelligence") to external systems, allowing AI to act not only as a text generator but as a (supervised) junior developer guided by the CMS context.

Brainstorming on concrete use cases and work in progress

Subsequently, we did an internal brainstorming to define solid and repeatable use cases, for different stakeholders. Two emerged:

- for Drupal developers: starting from an SDC (Single Directory Component) template and generating entities, paragraphs, and fields via MCP

- for frontend developers/site builders: building a decoupled frontend using only the MCP server

We chose to focus on the first use case, which is still ongoing today, exploring how to automate the generation of backend structures from SDC templates.

Improving MCP in Drupal and becoming maintainer

In parallel, we also contributed directly to improving the implementation of MCP in Drupal.

Roberto became maintainer of the Drupal MCP Client module, modernizing it to support the new Tool API and improving security with support for authentication headers (such as bearer tokens). Last but not least, Roberto also contributed bugfixes to the Drupal MCP module, which turns Drupal into an MCP server.
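For readers curious about what an MCP client actually sends, here is a Python sketch, using only the standard library, that builds (but does not send) a `tools/call` request. MCP messages are JSON-RPC 2.0, and a bearer token travels in the `Authorization` header, which is the kind of authentication header support mentioned above. The endpoint URL and the `search_content` tool are hypothetical, a real session would first perform the MCP `initialize` handshake (omitted here), and this is not the Drupal MCP Client module's code.

```python
import json
import urllib.request


def build_tools_call(endpoint: str, token: str, tool: str,
                     arguments: dict, request_id: int = 1) -> urllib.request.Request:
    """Build an HTTP POST carrying a JSON-RPC 2.0 `tools/call` request for an MCP server."""
    payload = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP servers may answer with plain JSON or an SSE stream.
            "Accept": "application/json, text/event-stream",
            "Authorization": f"Bearer {token}",  # bearer-token authentication
        },
        method="POST",
    )


request = build_tools_call(
    "https://drupal.example.com/mcp",  # hypothetical endpoint
    "secret-token",
    "search_content",                  # hypothetical tool name
    {"query": "pricing page"},
)
print(request.get_method(), json.loads(request.data)["method"])  # → POST tools/call
# Sending it would be: urllib.request.urlopen(request)
```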

Why does MCP matter from a business perspective too? Because it's an "enabling" technology: it's what allows agents to be not just conversational interfaces, but operators that use tools, interact with APIs, execute actions, and automate processes.

Lara Translate Integration: enterprise AI translations, superior multi-lingual content

Translation is one of the most immediate and concrete use cases of AI in enterprise content systems: multi-country sites, catalogs, knowledge bases, documentation, compliance.

Alongside the infrastructural work, SparkFabrik responded to a specific client need by developing the TMGMT Lara Translate module. Lara is an AI model specialized in translation, producing higher-quality output than general-purpose LLMs (ChatGPT, Gemini, Llama…) while maintaining lexical and stylistic consistency.

The module integrates Lara as a translation provider within Drupal's TMGMT (Translation Management Tool) system, supporting all of Lara's unique features and with attention also to practical details such as effective text splitting and error handling logic.
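The module's actual splitting logic isn't described here, so the following is only a generic Python sketch of one common approach: packing whole sentences greedily into chunks under a size limit, and cutting any single over-long sentence into fixed-size pieces. The function name and the `max_chars` limit are hypothetical and are not part of Lara's or TMGMT's API.

```python
import re
from typing import List


def split_for_translation(text: str, max_chars: int) -> List[str]:
    """Greedily pack whole sentences into chunks of at most `max_chars` characters.

    A single sentence longer than the limit is cut into fixed-size pieces.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        if len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.extend(sentence[i:i + max_chars] for i in range(0, len(sentence), max_chars))
            continue
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)  # current is non-empty here
            current = sentence
    if current:
        chunks.append(current)
    return chunks


print(split_for_translation("One. Two. Three.", 9))  # → ['One. Two.', 'Three.']
print(split_for_translation("abcdefghij", 4))        # → ['abcd', 'efgh', 'ij']
```

Keeping sentences whole matters because a sentence cut in half loses the context the translation model needs; a production splitter would also have to respect HTML markup and abbreviations, which this naive regex ignores.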

An interesting aspect is that this contribution also triggered direct contact, opening collaboration opportunities and demonstrating how project needs can transform into valuable contributions for the community.

Beyond code: content, outreach and community

Contributing doesn't just mean writing code. It also means telling the story: explaining what we're doing, what's ready, what's evolving, and especially why it's worth investing. In 2025, we're especially proud of the collaboration between the technical and marketing teams, which turned our work into shared culture.

Content: Drupal AI Logs and blog articles

We told the story of some of the contributions also on our social channels through the Drupal AI Logs series, making the contribution process transparent, transforming technical updates into usable insights and (we hope) inspiring other contributors and teams to experiment.

We also put great effort into writing blog articles on Drupal and Drupal AI (including on our tech blog), sharing our perspectives and promoting the ecosystem of our favorite CMS: in 2025 alone, we published 13 in-depth articles on Drupal.

DrupalCamp Italy 2025: two talks, two complementary perspectives

In November 2025, we didn't just contribute to organizing DrupalCamp Italy. We also brought the Drupal AI Initiative to the stage, with two talks that, together, tell our approach well.

- The state of the Drupal AI Initiative

Luca Lusso shared the overall state of the initiative and some of our contributions, offering an insider view that was realistic and hype-free. The goal: to inspire the Italian community and promote the initiative. (Talk recording and slides).

- AI Agents in Drupal and MCP (with PoC)

Roberto showed how to implement AI agents in Drupal, both via the UI and via code. In the talk, he presented a PoC of an AI Customer Assistant for e-commerce: an agent that converses with the user, searches for products, suggests options, adds items to the cart, and sends event notifications to Slack via MCP. (Talk recording and slides).

Technical webinar: robust AI agents on Symfony Messenger

As mentioned above, we also contributed to an international technical webinar, organized with the team coordinating the Drupal AI Initiative (James Abrahams and Marcus Johansson).

Luca showed his PoC based on Symfony Messenger, demonstrating agents capable of executing asynchronous tasks and supporting message streaming (even in case of tool invocation).

The goal was to show how Symfony Messenger can help overcome the architectural limitations of Drupal and PHP when it comes to agents. In the same webinar, a second demo focused on FlowDrop was presented, and other community members joined the discussion.

An extra that comes from the initiative: workshop on GitHub Copilot

In the study and R&D path related to the Drupal AI Initiative, we also invested in training and sharing on AI development tools, such as GitHub Copilot.

We shared our experience in a hands-on technical workshop led by Luca Lusso, explaining in practice how to integrate Copilot into the development cycle in VS Code as a real partner.

The Copilot workshop is free and freely accessible, not vertical on Drupal but intentionally more open to the development world.

A year of innovation and a look to the future

2025 closed with an extremely positive balance for the Drupal ecosystem, a year of construction in which SparkFabrik contributed to laying the fundamental bricks: a solid development environment, a security framework (Guardrails), a robust execution engine (Async Runner on Symfony Messenger), and interoperability protocols (MCP).

Under the guidance of our CTO Paolo Mainardi, our contributions focused on high-value areas and critical nodes for bringing AI into real client projects, with strong emphasis on governance and security, and on autonomous AI agents capable of performing complex actions (from site building to configuration).

We also recognized (and promoted) the need to support fully open source and local stacks (technological agnosticism), anticipating the digital sovereignty and data privacy needs of European enterprise clients subject to regulations such as GDPR, the AI Act, and the Cyber Resilience Act.

Summary of main technical contributions 2025 (Drupal AI)

| Area | Main Contribution | Impact / Result |
|---|---|---|
| Infrastructure | Unified DDEV development environment | Standardization of setup for all global contributors. |
| Security | Guardrail Agents Architecture | Framework for AI input/output security, viewable and configurable. |
| Performance | AI Agents on Symfony Messenger: Async Runner & Streaming | Execution of complex agents without timeouts, synchronous and asynchronous execution, reactive UX thanks to real-time streaming. |
| Search | RAG and Router Agent | Intelligent system to route queries to the correct index, reducing costs and noise. |
| Interoperability | Drupal MCP Server & Client | PoC for generating a React frontend from Drupal via AI with MCP; modernization of the MCP client. |
| Localization | TMGMT Lara Translate Provider | Integration of a professional translation service into the Drupal editorial workflow. |

Looking to 2026, our vision is clear. Drupal is no longer "just a CMS." It's positioning itself as the ideal platform for Enterprise AI: a place where data is structured, secure, and accessible, and where artificial intelligence isn't a toy just for "wow effect," but a tool governed by precise compliance and security rules. It's exactly the type of AI needed for production, not for demos.

SparkFabrik will continue to be at the forefront. We won't just use AI; we'll continue to write the code that makes it possible, open, and secure for everyone, for real projects.

At SparkFabrik, we combine deep technical expertise in Drupal with advanced skills in AI integration, composable architectures, and enterprise governance. Our Drupal development services cover the entire spectrum: from strategic consulting on the AI readiness of your current architecture, to implementation of custom AI-powered solutions, through to security, ongoing support, and optimization.

If you're evaluating how to integrate AI into Drupal (or into a broader enterprise ecosystem), and you want to do it with a partner who has really put their hands in it (at the product, community, and delivery level), let's talk.

This article is part of our series dedicated to Drupal. To explore other aspects of the platform, we invite you to consult our previous articles on features and benefits, comparison with alternatives, migration strategies, security and compliance, composable architecture, Design System, Drupal headless omnichannel, and overview and news of Drupal AI.
