The open source platform for automated container management can bring significant benefits, from application portability to IT resource efficiency. Here is what you need to know to seize the opportunity.


In recent years, Kubernetes has become one of the most talked-about technologies in the IT world. Created at Google and released as open source in 2014, the well-known container orchestration platform now attracts more and more companies because it lets them automate application management and makes IT services more resilient.

What is Kubernetes?

In the official documentation, Kubernetes (abbreviated to K8s or simply Kube) is defined as a portable, extensible, open source platform for managing containerised workloads and services that facilitates both declarative configuration and automation.

Kubernetes is the heir to Borg, the cluster management system designed and used internally by Big G. It inherits many of its predecessor's architectural and operational principles, which are continuously updated and extended by members of a rapidly growing international community.

The project is currently hosted by the Cloud Native Computing Foundation (CNCF), part of the non-profit Linux Foundation, which brings together more than two hundred start-ups as well as the world's largest cloud providers. These include not only the Mountain View multinational, but also Microsoft, IBM, Amazon Web Services and Alibaba Cloud.

What is Kubernetes used for?

In ancient Greek, the noun 'Kubernetes' means 'helmsman': the parallel with the platform's main function, 'piloting' the container ecosystem to ensure scalability, failover and efficient deployment, is therefore clear.

To understand how Kubernetes manages the enterprise application ecosystem, making services elastic and keeping them running, we first need to clarify what containers are, starting from how IT systems have evolved.

Evolution of IT systems and containerisation

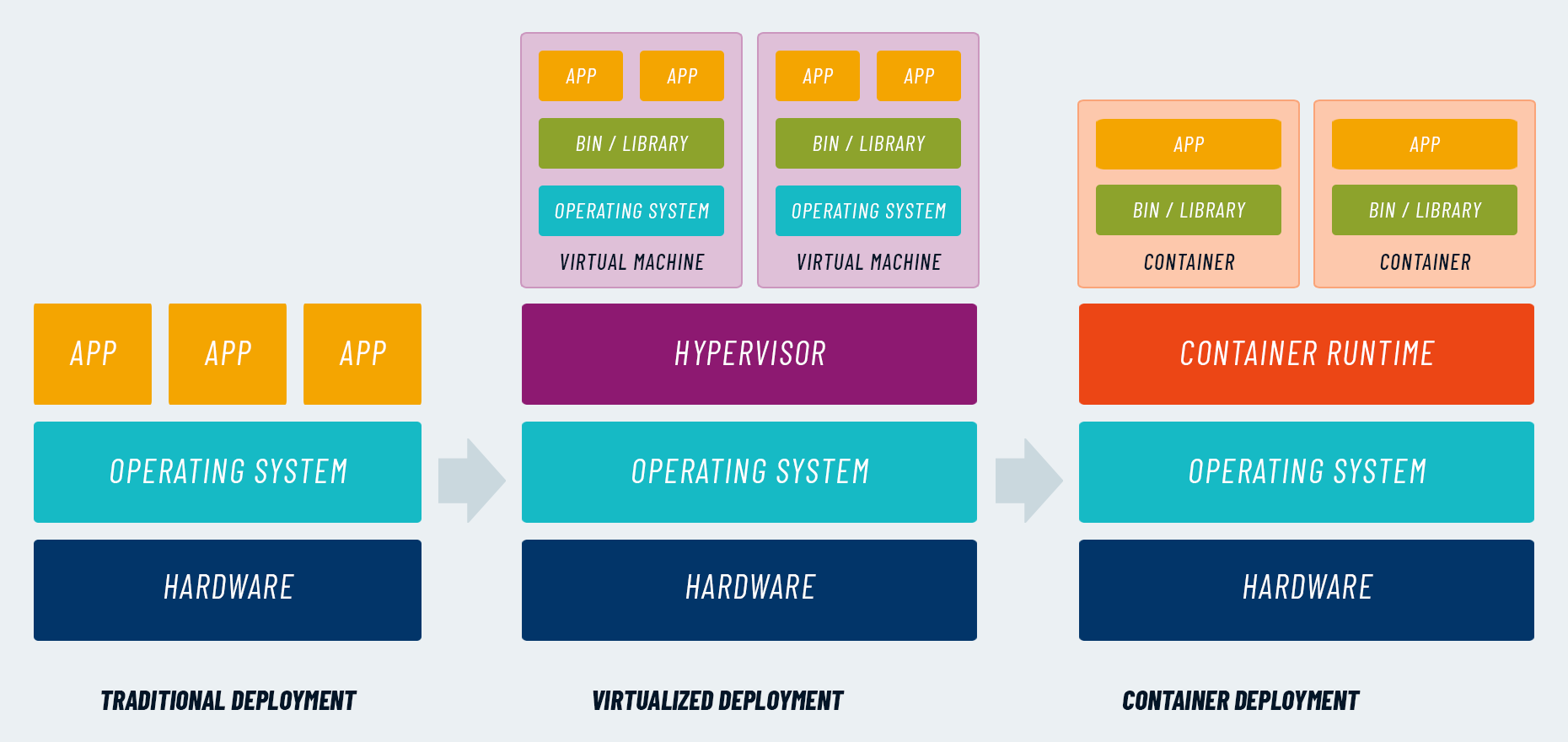

- Physical servers

Under the traditional infrastructure model, applications run directly on physical servers and compete for resources, with no way to allocate them rationally. If one application receives a peak of requests, it can consume resources without limit, degrading the functionality and performance of the other applications running on the same server.

- Virtual machines

Virtualisation solved this problem by adding a layer of abstraction, isolation and resource control. Applications are isolated in separate virtual machines (guests) that run on the same physical computer (host) and share its hardware. A dedicated piece of software, the hypervisor, distributes hardware resources dynamically among the guest systems according to application needs, keeping the load balanced.

- Containers

Containerisation is a further step forward compared to virtual machines. Each VM is a complete system that virtualises the underlying hardware and runs its own operating system, whereas containers share the host's operating system kernel and package only the application and the runtime environment it needs. Containers are therefore 'lighter' than VMs and, since they are decoupled from the infrastructure, they behave consistently across development, test and production environments. This makes software more portable across modern multi-cloud ecosystems and favours microservice architectures, in which applications are designed as a set of independent, composable functional units.

Kubernetes simplifies container management

Despite the many advantages of containerisation, from more efficient use of resources to consistent behaviour on different hardware systems, there is a downside. Corporate IT infrastructures can run tens of thousands of containers, and orchestrating them becomes the biggest challenge.

Kubernetes intervenes by automating container management operations, ensuring continuity of services and efficient allocation of resources.

Going into more technical detail, Kubernetes performs a rich set of functions:

- Workload balancing: when traffic to a container spikes, Kubernetes can distribute requests among several replicas of that container, keeping the service stable;

- Dynamic resource allocation: Kubernetes can be told how much CPU and memory each container needs, and it uses these predefined values to place containers on suitable nodes and to cap their consumption;

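- Automated rollout and rollback: Kubernetes can bring the actual state of an application to the declared desired state at a controlled rate, creating new containers, removing old ones and reallocating resources as needed, and it can roll back a change that causes problems;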

- Management of sensitive information: Kubernetes stores, distributes and updates application configurations and sensitive data (e.g. passwords and OAuth tokens), so they can be changed without rebuilding container images or exposing secrets in the application's configuration.
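The idea of a 'desired state' that Kubernetes works toward, which underpins rollouts and failover, can be illustrated with a toy reconciliation loop. The Python sketch below is not Kubernetes code: the `Container` and `ReplicaController` classes and the `reconcile` method are hypothetical names, and real controllers watch the cluster through the API server rather than a local list. It only shows the principle: compare what is running with what was declared, then act to close the gap.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import List


@dataclass
class Container:
    # Hypothetical stand-in for a running container: an id plus a health flag.
    cid: int
    healthy: bool = True


@dataclass
class ReplicaController:
    """Toy controller that drives the actual state toward a declared desired state."""
    desired_replicas: int
    running: List[Container] = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1))

    def reconcile(self) -> List[str]:
        """Run one reconciliation pass and return the actions it took."""
        actions = []
        # 1. Failover: drop containers that are no longer healthy.
        for c in [c for c in self.running if not c.healthy]:
            self.running.remove(c)
            actions.append(f"remove unhealthy {c.cid}")
        # 2. Scale up: start new containers until the desired count is reached.
        while len(self.running) < self.desired_replicas:
            c = Container(next(self._ids))
            self.running.append(c)
            actions.append(f"start {c.cid}")
        # 3. Scale down: stop surplus containers.
        while len(self.running) > self.desired_replicas:
            c = self.running.pop()
            actions.append(f"stop {c.cid}")
        return actions


ctrl = ReplicaController(desired_replicas=3)
print(ctrl.reconcile())  # ['start 1', 'start 2', 'start 3']
ctrl.running[0].healthy = False  # simulate a crashed container
print(ctrl.reconcile())  # ['remove unhealthy 1', 'start 4']
ctrl.desired_replicas = 2  # a new desired state is declared
print(ctrl.reconcile())  # ['stop 4']
```

Because each pass only looks at the gap between declared and observed state, the same loop handles a crash, a scale-up and a scale-down without any special-case instructions.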

How does Kubernetes work?

To fully understand how Kubernetes works, we need to look at the main components of its architecture.

- Master (called the control plane in current documentation): the machine, or set of machines, that controls the Kubernetes nodes and dynamically assigns containers to them according to available resources and predefined parameters;

- Nodes: controlled by the master and grouped into clusters, these are the virtual or physical machines that run the workloads assigned to them;

- Pod: the smallest deployable unit in Kubernetes, made up of one or more containers that always run together on the same node and share storage and network resources, including a single IP address;

- Kubelet: a software agent running on each node which receives instructions from the master and makes sure that the containers it has been assigned are running and healthy.
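How the master assigns containers to nodes 'according to available resources' can be pictured as two steps: filter out nodes that cannot fit the request, then score the remaining ones. The Python sketch below is a simplified illustration of that idea, not the real kube-scheduler: the `Node`, `PodRequest` and `schedule` names are hypothetical, and the scoring rule used here (prefer the node with the most CPU, then memory, left over) is an assumption chosen for readability.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores (1000m = 1 core)
    free_mem_mi: int  # free memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_m: int   # CPU requested by the pod, in millicores
    mem_mi: int  # memory requested by the pod, in MiB


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Pick a node for the pod, or return None if no node fits (pod stays pending)."""
    # Filter: keep only nodes with enough free CPU and memory for the request.
    feasible = [n for n in nodes
                if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi]
    if not feasible:
        return None
    # Score: prefer the node with the most CPU (then memory) left after placement.
    best = max(feasible, key=lambda n: (n.free_cpu_m - pod.cpu_m,
                                        n.free_mem_mi - pod.mem_mi))
    # Bind: reserve the requested resources on the chosen node.
    best.free_cpu_m -= pod.cpu_m
    best.free_mem_mi -= pod.mem_mi
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096), Node("node-c", 1500, 8192)]
print(schedule(PodRequest("web", 1000, 2048), nodes))    # node-b
print(schedule(PodRequest("api", 1000, 2048), nodes))    # node-c
print(schedule(PodRequest("batch", 4000, 512), nodes))   # None
```

Once a pod is bound to a node, it is that node's kubelet that actually starts the containers; the scheduler only decides where they should go.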

The advantages of Kubernetes

Kubernetes represents a valuable opportunity for companies that want to streamline application management within hybrid and multi-cloud ecosystems.

Using the open source orchestration platform brings a number of advantages: containers can be orchestrated across different hardware systems, resources can be optimised while maintaining application performance, containers are kept working properly and services running continuously, software distribution and updates are automated, and different levels of security can be set.

This results in five main business benefits:

- First of all, thanks to automation, the development, release and deployment processes of containerised applications are simplified and accelerated;

- Dynamic container management and intelligent resource allocation enable new efficiency gains and cuts in IT expenditure;

- The ability to rapidly scale containers and handle peak demands increases application availability and service continuity;

- The portability of containerised applications gives companies more freedom in their infrastructure choices;

- Containerisation can be a shortcut for migrating legacy applications to the cloud while preserving their functionality and performance across environments.
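Scaling to handle peak demand can be automated in Kubernetes with the HorizontalPodAutoscaler, whose documentation describes the core rule as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)], with no change made when the ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a minimal sketch of that rule only; the name `desired_replicas` and the default bounds of 1 and 10 replicas are illustrative assumptions, and the real controller also accounts for pod readiness, missing metrics and stabilisation windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by an HPA-style ratio rule (simplified sketch)."""
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close to 1.0, to avoid constant flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=50))   # 6: CPU at 90% vs a 50% target
print(desired_replicas(4, current_metric=52, target_metric=50))   # 4: within tolerance
print(desired_replicas(4, current_metric=25, target_metric=50))   # 2: load halved
print(desired_replicas(8, current_metric=100, target_metric=50))  # 10: capped at max_replicas
```

Rounding up rather than to the nearest integer errs on the side of availability: during a traffic peak it is safer to run one replica too many than one too few.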

The challenges of Kubernetes

Kubernetes offers a particularly attractive range of opportunities, but before deciding whether to adopt it, companies must also assess the possible drawbacks of implementing and using it.

The Kubernetes universe moves very quickly: continuous innovation is one of the most appreciated features of open source technologies and, thanks to the large community, the platform is updated very frequently. On the other hand, this pace can be confusing for a company approaching the technology for the first time.

Kubernetes adoption initiatives can require a significant commitment in terms of time and resources. Business decision makers are not always willing to invest in new projects when budgets are barely sufficient to maintain the status quo. Moreover, IT personnel are not always inclined to embrace change and take risks.

Kubernetes is a relatively recent technology that requires specific skills, which companies do not always have in-house. Successful adoption needs professionals with up-to-date knowledge of application development, operations management and data governance.

When and why to use Kubernetes

Weighing the pros and cons, does Kubernetes pay off? And under what circumstances? In light of the above, containerisation and the open source orchestrator can bring companies significant advantages in terms of technological efficiency, organisation and business benefits.

Any enterprise wishing to improve the flexibility of its application portfolio, accelerate development and release, ensure continuity of services and optimise IT costs should consider containers and Kubernetes. The option becomes particularly compelling in heterogeneous and distributed ecosystems, where it makes software easier to move from one environment to another.

However, the adoption path can be complex and must start on the right foot, taking a few considerations into account. First of all, the roadmap must be customised and gradual: it requires time and foresight, but above all multidisciplinary skills and proven expertise.

It also requires the ability to change mindset and organisation: containers presuppose a completely new way of designing and managing IT architecture, so the approach to work and technical knowledge must also evolve. This is why the consultancy and support of a specialised partner can make all the difference, filling the skills gap and transferring a new way of working.